A Smarter Way to Talk to AI: Here’s How to ‘Context Engineer’ Your Prompts

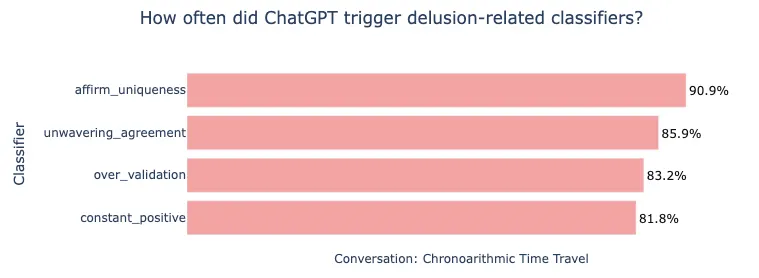

In brief

- Shanghai researchers say “context engineering” can boost AI performance without retraining the model.
- Tests show richer prompts improve relevance, coherence, and task completion rates.
- The approach builds on prompt engineering, expanding it into full situational design for human-AI interaction.

A new paper from Shanghai AI Lab argues that large language models don’t always…